This is part of a 3 part series!

Part one: General concepts: https://www.vicharkness.co.uk/2019/01/20/the-art-of-defeating-facial-detection-systems-part-one/

Part two: Adversarial examples: https://www.vicharkness.co.uk/2019/01/27/the-art-of-defeating-facial-detection-systems-part-two-adversarial-examples/

Part three: The art community’s efforts: https://www.vicharkness.co.uk/2019/02/01/the-art-of-defeating-facial-detection-systems-part-two-the-art-communitys-efforts/

Introduction

The use of facial detection/recognition systems in public spaces is of great concern to privacy advocates. As the general public gains an awareness of such systems, concern becomes more widespread. Various privacy advocate groups have sprung up (such as Big Brother Watch in the UK) which aim to raise awareness of the perceived loss of liberties. I will not be discussing the accuracy of claims here, nor the ethical implications of mass surveillance (be that for law enforcement, advertising purposes, or other). I will instead discuss the general concepts of these technologies, and some of the interesting attempts being made by individuals and small groups to defeat them.

First off, a clarification is to be made. Facial detection and facial recognition, although heavily overlapping, are different. As the names suggest, facial detection pertains to the detection of a human face. Facial recognition aims to match a detected human face to faces stored in some database. Most of the privacy concerns stem from the use of facial detection in the first place, and so this will be the main point of discussion in this article. Facial recognition systems will of course be touched on, as they sit on top of these facial detection systems. It is also worth noting that I will not be providing deep explanations of the driving technologies here. This article is more about presenting the concepts, than gaining a true understanding of the subject area.

How does it work?

So, how do facial detection systems work? There are numerous different driving technologies that can be used (neural networks, support vector machines) to create systems, which of course interpret images in a very non-human way, because the machines don’t “think” like a human does. In terms of human explanations, we can think in terms of general assumptions being made by systems. The general assumptions contain the obvious: oval head, eyes roughly in middle, nose, mouth below that. There are various permutations possible in these areas, and spacing/colouration can vary greatly (more on this later).

What are faces?

Where do these generalisations come from? Facial detection systems will not be made specifically for detecting faces. The programmer does not explain to the machine what a nose is and where it goes. Without the ability to learn and reason as a human does, it would be futile. Instead, the system is provided with a large set of training data, and it forms generalisations based upon it. For a facial detection system, you would provide it with 1000s of photographs of human faces. Generally, the more pictures you provide, the more robust your system will be. If you provided your system with only 5 pictures it would likely only be able to detect faces that looked like those.

The acceptable error rate also factors quite heavily in the use of facial detection systems (as well as facial recognition systems). If your facial recognition system was trained on exclusively pictures of one person and then attempted to detect the face of said person in a crowd, it would most likely still not be able to achieve 100% confidence in the match. This stems from variance in lighting conditions, angle, complexion, etc. Acceptable levels of error in matches vary, but generally you want at least 90%. The same occurs in facial detection. If you have only trained the system on a few faces it might not be able to generalise enough to detect all human faces, only those that look quite a bit like those in the training sets. This can lead to some big problems in facial detection/recognition systems.

For examples within this article, I will use my face. This is me:

What can a system learn about a face from this photo? It can learn that a human face has two oval shapes in the middle, which are blue. Above these are thin brown strips. The ovals are surrounded by black rectangles which are bridged in the middle, over a slightly-darker bit. To the left of this is an even darker bit. Below this is two light lines, leading to a flat pink oval. Around the top and sides is brown; below is purple. There is also a patch of white above and to the left, and to the right are various curved shapes and lots of brown/grey.

For a start, from one picture, the system has no way of knowing what is a face and what isn’t. It has no concept of a face, it can only generalise from the contents of an image. So we could add another photo of me to our system, with a different background. The system might then be able to “infer” that the background doesn’t matter, as the only consistent thing is the bit in the middle. What if I then wore a different coloured jumper, or took my glasses off? We would need more training data to cover these eventualities. What if I dyed my hair, or changed the style? What if I closed my eyes, or wore some makeup? What if the camera didn’t have a well-lit front-on view of me? To be able to cover all our bases, the system would need a large number of images of me.

This system, however, would still only be able to detect me. Show it another white female, and the confidence of the system in the detection of a face would likely be low because we would likely have varying facial structure. So, we now add a whole bunch of photos of other white women to the training set. If we then show it a man, it may not detect them as a face. So, add some of those. Now we show it different races, and it can’t detect those. Add even more training images to be representative of all different races that the system may encounter.

We now have a system with tens of thousands of different photos as training data. Surely this is enough to have created a robust facial detection system? Unfortunately, no. Although throughout the training set we have been representative of most general facial types that the system will ever see, the system does not manually compare every input image to every single image in its dataset. This would be incredibly computationally complex, and would require large amounts of time, making it highly impractical. Generally, we want our systems to work in real-time. So, as previously mentioned, generalisations need to be made.

Eigenfaces

To summarise these massive image sets, composite images known as eigenfaces may be generated. These are generally used for facial recognition, but can also be applicable for facial detection. Example Eigenfaces can be seen here:

Source:https://commons.wikimedia.org/w/index.php?curid=442472

Although slightly creepy looking, you can see that these eigenfaces feature general human features. You can see the eyes, nose, cheeks, mouth, and general facial shape. These provide a neat way to summarise human appearances. A person could look 54% like the first image, 2% like the second, 88% like the third, -40% like the fourth, etc. This means that the deployed system does not need to store images of every single person to be recognised- they can each be represented by a series of numerical values. When an image is presented for facial recognition it can then be computed against the eigenfaces being used by the system to gain numerical values as seen above. These values can then be compared to the values of all enrolled faces to check for a match (allowing some margin of error). This technique also adds for a layer of security in the event that the system is compromised- it means that an attacker is not able to recover a complete set of faces which are enrolled in to a system, which could make people targets if the system was being used for access control to somewhere particularly interesting. Facial detection can be performed similarly using eigenfaces.

The problem of faces

The problem is, human faces vary quite a lot. It appears in the media every now and then that someone made a racist computer because it excels in recognising white males. There tends to be two overarching reasons for this, although quite often commentary is provided blaming a third reason. The first reason is that, frankly, quite often white men are making these systems. I’m not going to get in to gender/race balances in STEM subjects here. Much like I have used my own face in this article, it’s easy for researchers/developers to use their own faces in systems. A system trained on white male faces is going to do better at detecting white males over any other race/gender.

Is this still a problem at scale? In a word, yes. There are numerous training data sets available online. These sets are often carefully hand curated by people to meet some criteria: image size, lighting, angle, etc. Quite often though, they are not the most diverse. If you feed a system 9 images that look like one thing and 1 image that looks like something else, a generalisation will look a lot more like the 9 than the 1. This isn’t something that the developers of facial detection systems can help too much. Often the people crafting the training image sets are not the ones crafting the algorithms to make use of them. If more representative training image sets are available, then there should be less of an issue in this particular tract of the problem.

Another area of issue stems to facial dimorphisms. These can be both by choice, or by genetics. Systems tend to find male faces easier to detect that female faces. One of the reasons for this is the increased variance in female appearances, i.e. makeup. Training sets of large amounts of women will likely contain quite a bit of variance in facial colouration- one woman might prefer blue eyeshadow, one gold. One might have black lipstick and a lot of eyeliner, another might wear no makeup. As men tend not to wear makeup, there tends to be less dramatic variation (facial hair is of course highly variant, but the range of colours tend to be less so). This variance makes it less easy to generalise on the appearance of a female face, thus making it harder for systems to match a new female face to a generalised image.

The genetic side of facial dimorphism is also one that causes issues. Even if skin tone is completely removed from the equation, facial structures vary by race. If the system is trained on an image set that primarily includes people with Caucasian bone structures, it will be less likely to detect other bone structures. This ties back in with the issue of training data, but even a completely balanced training data set would cause issues. As with makeup, there is so much variance that forming generalisations is hard. Studies have found that a system trained on images of black people will do well at detecting black faces, but struggle with white ones. Conversely, a system trained on images of white people will perform well at detecting white faces, but poorly for black faces. A system trained on both white and black faces will detect both black and white faces at lower rates than a system trained on a singular race/gender.

The third option for facial detection systems struggling that is often given is “programmers are racist”. I have previously had a conversation with a self-proclaimed expert on the subject at a conference about how no biases exist in training sets, and that algorithms CAN generalise perfectly. Instead, the issue stems from programmers all being subconciously sexist and/or racist, and so making their programs sexist and/or racist. Seriously. I won’t provide my thoughts on the matter here, but I’m sure they can be inferred.

There’s no silver bullet to fixing this issue. However, the use of eigenface-like concepts to generate multiple different general human facial images can be beneficial, as can the simultaneous running of multiple algorithms, each targeted towards a different demographic. This vastly increases computational complexity and processing time however, and so may be impractical.

What generalisations are being made about the face?

Let’s go back to the previously used picture of myself.

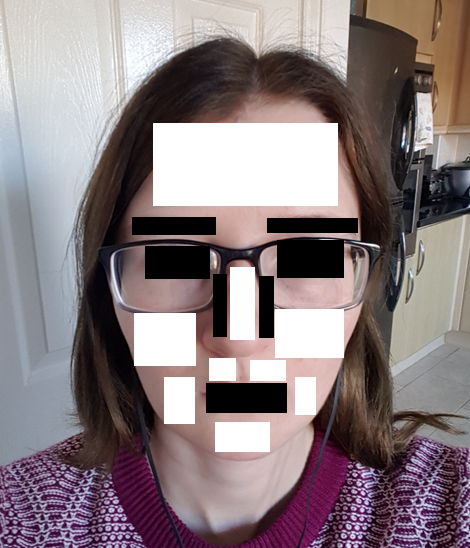

In my face there are various features, and there are various featureless spaces. These can both be of use to a system. Areas of the face can be interpreted in terms of light and dark. My eyes, eyebrows, nose sides, and lips all are darker than their surroundings. Conversely my forehead, cheeks, nose bridge, and chin all catch the light. This can be summarised thusly, although there is course much more granularity to it in reality:

These general balances of light and dark are foundational in the generalisation of human faces for detection and recognition. Look again at the colourless Eigenfaces:

Source:https://commons.wikimedia.org/w/index.php?curid=442472

You can see here how the general concepts of the facial features can be represented through these light and dark patches. This forms one of the most commonly used methods of defeating facial detection systems: artificially manipulating the light and dark balances of faces.

In my next post, I’ll discuss the actual efforts to defeat facial detection systems.

[…] The art of defeating facial detection systems: Part one […]

[…] read the first part (which describes how these systems generally work), it can be found here: https://www.vicharkness.co.uk/2019/01/20/the-art-of-defeating-facial-detection-systems-part-one/. The second part (which discusses adversarial examples for facial detection/recognition […]

[…] systems and how to defeat them, which can be seen here: Part one: General concepts: https://www.vicharkness.co.uk/2019/01/20/the-art-of-defeating-facial-detection-systems-part-one/ Part two: Adversarial examples: […]

[…] is largely built on the information detailed within these blog posts: Part one: General concepts: https://www.vicharkness.co.uk/2019/01/20/the-art-of-defeating-facial-detection-systems-part-one/ Part two: Adversarial examples: […]

[…] in learning more about dodging facial detection/recognition sytems? Read on here: General concepts: https://www.vicharkness.co.uk/2019/01/20/the-art-of-defeating-facial-detection-systems-part-one/Part two: Adversarial examples: […]